Agent

Review

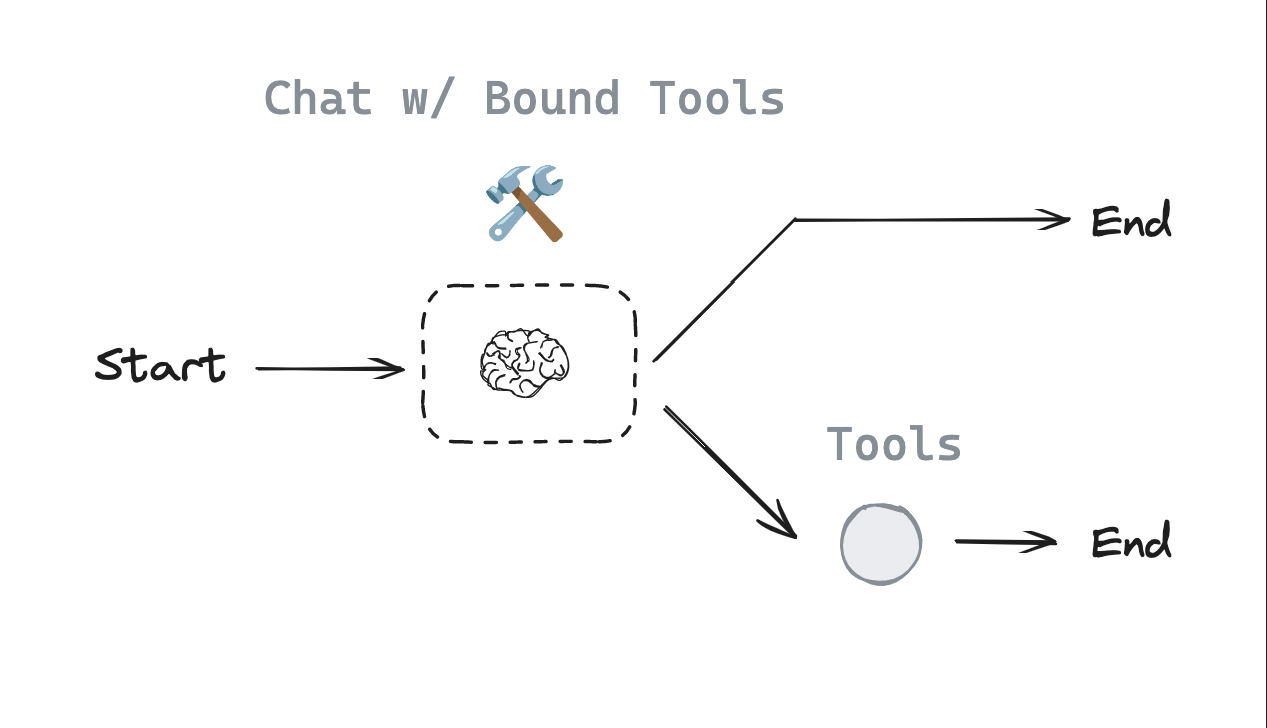

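We built a router.

- The chat model decides whether to make a tool call based on the user input

- A conditional edge routes to the appropriate node: either the tool is called or the flow simply ends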
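To make the router concrete without an API key, here is a minimal pure-Python sketch. It assumes a hypothetical `fake_model` in place of a real chat model and a hypothetical `AIReply` class in place of LangChain's message types. The model either requests a tool call or replies directly. A conditional `route` function then picks the tool path or the end. In the tool path, the tool's output goes straight back to the user.

```python
import re
from dataclasses import dataclass, field

# Hypothetical stand-in for a chat model reply (not a LangChain class).
@dataclass
class AIReply:
    content: str = ""
    tool_calls: list = field(default_factory=list)  # each: {"name", "args", "id"}

def multiply(a: int, b: int) -> int:
    return a * b

TOOLS = {"multiply": multiply}

def fake_model(user_text: str) -> AIReply:
    """Hypothetical model: requests a tool call only when asked to multiply two numbers."""
    nums = [int(n) for n in re.findall(r"-?\d+", user_text)]
    if "multiply" in user_text.lower() and len(nums) >= 2:
        call = {"name": "multiply", "args": {"a": nums[0], "b": nums[1]}, "id": "call_1"}
        return AIReply(tool_calls=[call])
    return AIReply(content="Hello! How can I help?")

def route(reply: AIReply) -> str:
    """Conditional edge: go to 'tools' if the model asked for a tool, else end."""
    return "tools" if reply.tool_calls else "END"

def run_router(user_text: str):
    reply = fake_model(user_text)
    if route(reply) == "tools":
        call = reply.tool_calls[0]
        # Router behaviour: the tool output goes straight back to the user;
        # the model never sees it.
        return TOOLS[call["name"]](**call["args"])
    return reply.content

print(run_router("Multiply 2 and 3"))  # tool path
print(run_router("Hi there"))          # direct-response path
```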

Goals

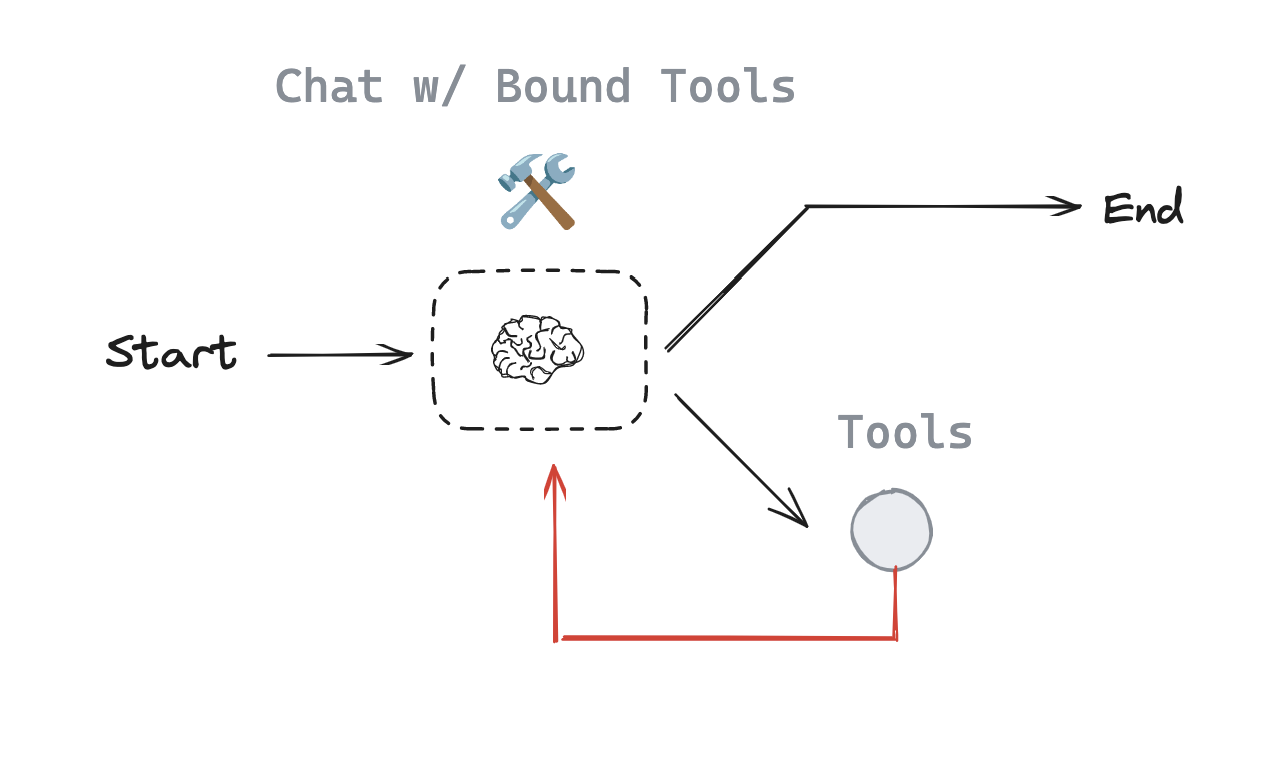

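Now, we can extend this into a generic agent architecture.

In the router above, we invoked the model and, if it chose to call a tool, we returned a ToolMessage to the user.

But what if we simply pass that ToolMessage back to the model?

We can let the model either (1) call another tool or (2) respond directly.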

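This is the intuition behind ReAct, a general agent architecture:

- act: let the model call specific tools

- observe: pass the tool output back to the model

- reason: let the model reason about the tool output to decide what to do next (e.g., call another tool or just respond directly)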
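The act/observe/reason cycle can be sketched as a plain loop. In this sketch, `scripted_model` is a hypothetical stand-in for an LLM. It does not really reason. It follows a fixed plan for (3 + 4) × 2 ÷ 5 and feeds each new observation into the next call. `Msg` is a hypothetical message class. The structural point is that tool results are appended to the history and the model is called again. The loop stops only when the model replies without a tool call.

```python
from dataclasses import dataclass, field

# Hypothetical message type (not LangChain's): role is "user", "ai", or "tool".
@dataclass
class Msg:
    role: str
    content: object = ""
    tool_calls: list = field(default_factory=list)
    tool_call_id: str = ""

def add(a, b): return a + b
def multiply(a, b): return a * b
def divide(a, b): return a / b

TOOLS = {"add": add, "multiply": multiply, "divide": divide}

def scripted_model(messages):
    """Hypothetical LLM stand-in: picks the next step of (3 + 4) * 2 / 5
    based on how many tool results it has already observed."""
    results = [m.content for m in messages if m.role == "tool"]
    plan = [("add", {"a": 3, "b": 4}), ("multiply", {"b": 2}), ("divide", {"b": 5})]
    step = len(results)
    if step < len(plan):
        name, args = plan[step]
        args = dict(args)
        if step > 0:
            args["a"] = results[-1]  # reuse the previous observation
        return Msg("ai", tool_calls=[{"name": name, "args": args, "id": f"call_{step}"}])
    return Msg("ai", content=f"The result is {results[-1]}.")

def react(messages, model, tools, max_steps=10):
    for _ in range(max_steps):
        ai = model(messages)        # reason: decide the next action from the full history
        messages.append(ai)
        if not ai.tool_calls:       # no tool call: respond directly and stop
            return messages
        for call in ai.tool_calls:  # act: run each requested tool
            out = tools[call["name"]](**call["args"])
            # observe: feed the tool output back to the model as a tool message
            messages.append(Msg("tool", content=out, tool_call_id=call["id"]))
    raise RuntimeError("agent did not finish within max_steps")

history = react([Msg("user", "Add 3 and 4. Multiply by 2. Divide by 5.")], scripted_model, TOOLS)
print(history[-1].content)
```

Compared with the router sketch, the only structural change is that tool output goes back into `messages` and the model is invoked again. The `max_steps` guard is an added safety assumption, not something this lesson specifies.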

This general-purpose architecture can be applied to many types of tools.


```python
%%capture --no-stderr
%pip install --quiet -U langchain_openai langchain_core langgraph langgraph-prebuilt
```


```python
import os, getpass

def _set_env(var: str):
    if not os.environ.get(var):
        os.environ[var] = getpass.getpass(f"{var}: ")

_set_env("OPENAI_API_KEY")
```


```python
_set_env("LANGSMITH_API_KEY")
os.environ["LANGSMITH_TRACING"] = "true"
os.environ["LANGSMITH_PROJECT"] = "langchain-academy"
```


```python
from langchain_openai import ChatOpenAI

# Plain Python functions; bind_tools below turns each one into a tool the model can call
def multiply(a: int, b: int) -> int:
    """Multiply a and b.

    Args:
        a: first int
        b: second int
    """
    return a * b

def add(a: int, b: int) -> int:
    """Adds a and b.

    Args:
        a: first int
        b: second int
    """
    return a + b

def divide(a: int, b: int) -> float:
    """Divide a and b.

    Args:
        a: first int
        b: second int
    """
    return a / b

tools = [add, multiply, divide]
llm = ChatOpenAI(model="gpt-4o")

# For this notebook we set parallel tool calling to False, because math is generally done
# sequentially and this time we have 3 tools that can do math.
# OpenAI models default to parallel tool calling for efficiency, see
# https://python.langchain.com/docs/how_to/tool_calling_parallel/
# Play around with it and see how the model behaves with math equations!
llm_with_tools = llm.bind_tools(tools, parallel_tool_calls=False)
```
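The comment about sequential math can be illustrated with plain arithmetic. In (3 + 4) × 2 ÷ 5, each operation needs the previous result as an input. The three calls form a chain, not an independent batch that could be issued all at once in one model turn. The sketch below uses the same three functions. The `None` placeholder for "use the previous result" and the `run_sequentially` helper are hypothetical conventions for this example only.

```python
def add(a: int, b: int) -> int:
    return a + b

def multiply(a: int, b: int) -> int:
    return a * b

def divide(a: int, b: int) -> float:
    return a / b

# Each step's first argument depends on the previous step's output
# (None is a hypothetical placeholder meaning "use the previous result").
chain = [(add, 3, 4), (multiply, None, 2), (divide, None, 5)]

def run_sequentially(steps):
    result = None
    trace = []
    for fn, a, b in steps:
        a = result if a is None else a
        result = fn(a, b)
        trace.append((fn.__name__, a, b, result))
    return result, trace

result, trace = run_sequentially(chain)
for name, a, b, out in trace:
    print(f"{name}({a}, {b}) = {out}")
```

If the model issued all three calls in parallel, it would have to supply the arguments for multiply and divide before seeing 7 and 14. That is the situation `parallel_tool_calls=False` is meant to avoid here.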


Let's create our LLM and prompt it with the overall desired agent behavior.


```python
from langgraph.graph import MessagesState
from langchain_core.messages import HumanMessage, SystemMessage

# System message
sys_msg = SystemMessage(content="You are a helpful assistant tasked with performing arithmetic on a set of inputs.")

# Node
def assistant(state: MessagesState):
    return {"messages": [llm_with_tools.invoke([sys_msg] + state["messages"])]}
```
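The assistant node prepends the system message on every call but returns only the new model reply. It is the graph state's reducer that appends that reply to the history. The pure-Python sketch below models this with strings instead of message objects. `fake_llm` and `apply_update` are hypothetical stand-ins. MessagesState's real reducer (add_messages) also merges messages by ID, while this sketch only appends.

```python
# Hypothetical sketch: plain strings stand in for message objects.
SYS_MSG = "system: You are a helpful assistant tasked with performing arithmetic on a set of inputs."

def fake_llm(messages):
    """Stand-in for llm_with_tools.invoke: reports what it was given."""
    return f"ai: saw {len(messages)} messages, first = {messages[0]!r}"

def assistant(state):
    # The system message is sent to the model on every call
    # but is never written back into the state.
    return {"messages": [fake_llm([SYS_MSG] + state["messages"])]}

def apply_update(state, update):
    """Append-only reducer: new messages go to the end of the existing list."""
    return {"messages": state["messages"] + update["messages"]}

state = {"messages": ["user: Add 3 and 4."]}
state = apply_update(state, assistant(state))
print(state["messages"])
```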


As before, we use MessagesState and define a Tools node with our list of tools.
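Conceptually, a Tools node looks up each requested tool by name, calls it with the model-supplied arguments, and returns one result per call, tagged with the call's ID. The sketch below shows that dispatch in plain Python. `make_tool_node` and `ToolResult` are hypothetical names, not the prebuilt ToolNode's API. The real ToolNode reads the tool calls from the last AI message in the state. This sketch takes the tool-call list directly and stringifies each output.

```python
from dataclasses import dataclass

@dataclass
class ToolResult:  # hypothetical stand-in for a tool message
    name: str
    content: str
    tool_call_id: str

def add(a: int, b: int) -> int:
    return a + b

def multiply(a: int, b: int) -> int:
    return a * b

def make_tool_node(tools):
    """Build a dispatcher that maps each tool call to the function with that name."""
    registry = {fn.__name__: fn for fn in tools}

    def tool_node(tool_calls):
        results = []
        for call in tool_calls:
            out = registry[call["name"]](**call["args"])
            # Echo the call ID so each result can be paired with its request.
            results.append(ToolResult(call["name"], str(out), call["id"]))
        return results

    return tool_node

node = make_tool_node([add, multiply])
print(node([{"name": "add", "args": {"a": 3, "b": 4}, "id": "call_a"}]))
```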

The Assistant node is just our model with bound tools.

We create a graph with Assistant and Tools nodes.

We add a tools_condition edge, which routes to End or to Tools based on whether the Assistant calls a tool.
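The routing decision itself is small. Look at the latest message. If it carries tool calls, go to the tools node; otherwise end. Below is a minimal sketch of that logic. `tools_condition_sketch` is a hypothetical reimplementation for illustration, not LangGraph's source. `AIMessage` here is a hypothetical stand-in class, and `END` is just a sentinel string defined locally.

```python
from dataclasses import dataclass, field

@dataclass
class AIMessage:  # hypothetical stand-in, not langchain_core's class
    content: str = ""
    tool_calls: list = field(default_factory=list)

END = "__end__"  # local sentinel for "stop the graph"

def tools_condition_sketch(state):
    """Route to 'tools' if the latest message requests a tool call, otherwise end."""
    last = state["messages"][-1]
    return "tools" if getattr(last, "tool_calls", None) else END

print(tools_condition_sketch({"messages": [AIMessage(tool_calls=[{"name": "add"}])]}))
print(tools_condition_sketch({"messages": [AIMessage(content="The answer is 2.8.")]}))
```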

Now, we add one new step:

We connect the Tools node back to the Assistant, forming a loop.

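- After the assistant node executes, tools_condition checks whether the model's output is a tool call

- If it is a tool call, the flow is directed to the tools node

- The tools node connects back to assistant

- This loop continues as long as the model decides to call tools

- If the model response is not a tool call, the flow is directed to END, terminating the process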
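The loop described in this list can be simulated with a tiny hand-rolled graph executor. After each node runs, the executor merges its output into the state. It then follows either a conditional edge (assistant → tools or END) or a fixed edge (tools → assistant). Everything here is hypothetical, including `run_graph`, the dict-based messages, and a scripted assistant that requests two tool calls before answering. It mirrors the control flow only, not LangGraph's implementation.

```python
END = "__end__"  # local sentinel for "stop"

def run_graph(state, nodes, start, conditional, edges, max_steps=20):
    """Run a node, append its messages, then follow a conditional or fixed edge."""
    current = start
    for _ in range(max_steps):
        update = nodes[current](state)
        state = {"messages": state["messages"] + update["messages"]}
        current = conditional[current](state) if current in conditional else edges[current]
        if current == END:
            return state
    raise RuntimeError("graph did not reach END")

def assistant(state):
    # Scripted stand-in for the model: call a tool twice, then answer.
    n_tool = sum(1 for m in state["messages"] if m["role"] == "tool")
    if n_tool < 2:
        return {"messages": [{"role": "ai", "tool_calls": [f"call_{n_tool}"]}]}
    return {"messages": [{"role": "ai", "content": "done"}]}

def tools(state):
    calls = state["messages"][-1]["tool_calls"]
    return {"messages": [{"role": "tool", "content": f"result of {c}"} for c in calls]}

def route(state):
    # Same idea as tools_condition: tool calls -> "tools", otherwise END.
    return "tools" if state["messages"][-1].get("tool_calls") else END

final = run_graph({"messages": [{"role": "user", "content": "hi"}]},
                  nodes={"assistant": assistant, "tools": tools},
                  start="assistant",
                  conditional={"assistant": route},
                  edges={"tools": "assistant"})
print([m["role"] for m in final["messages"]])
```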


```python
from langgraph.graph import START, StateGraph
from langgraph.prebuilt import tools_condition
from langgraph.prebuilt import ToolNode
from IPython.display import Image, display

# Graph
builder = StateGraph(MessagesState)

# Define nodes: these do the work
builder.add_node("assistant", assistant)
builder.add_node("tools", ToolNode(tools))

# Define edges: these determine how the control flow moves
builder.add_edge(START, "assistant")
builder.add_conditional_edges(
    "assistant",
    # If the latest message (result) from assistant is a tool call -> tools_condition routes to tools
    # If the latest message (result) from assistant is not a tool call -> tools_condition routes to END
    tools_condition,
)
builder.add_edge("tools", "assistant")
react_graph = builder.compile()

# Show
display(Image(react_graph.get_graph(xray=True).draw_mermaid_png()))
```


```python
messages = [HumanMessage(content="Add 3 and 4. Multiply the output by 2. Divide the output by 5")]
messages = react_graph.invoke({"messages": messages})
```


```python
for m in messages['messages']:
    m.pretty_print()
```


```
================================ Human Message =================================

Add 3 and 4. Multiply the output by 2. Divide the output by 5
================================== Ai Message ==================================
Tool Calls:
  add (call_i8zDfMTdvmIG34w4VBA3m93Z)
 Call ID: call_i8zDfMTdvmIG34w4VBA3m93Z
  Args:
    a: 3
    b: 4
================================= Tool Message =================================
Name: add

7
================================== Ai Message ==================================
Tool Calls:
  multiply (call_nE62D40lrGQC7b67nVOzqGYY)
 Call ID: call_nE62D40lrGQC7b67nVOzqGYY
  Args:
    a: 7
    b: 2
================================= Tool Message =================================
Name: multiply

14
================================== Ai Message ==================================
Tool Calls:
  divide (call_6Q9SjxD2VnYJqEBXFt7O1moe)
 Call ID: call_6Q9SjxD2VnYJqEBXFt7O1moe
  Args:
    a: 14
    b: 5
================================= Tool Message =================================
Name: divide

2.8
================================== Ai Message ==================================

The final result after performing the operations \( (3 + 4) \times 2 \div 5 \) is 2.8.
```

LangSmith

We can look at the traces in LangSmith.