In [ ]:

%%capture --no-stderr

%pip install --quiet -U langchain_openai langchain_core langgraph langgraph-prebuilt

In [1]:

import os, getpass

def _set_env(var: str):
    # Prompt for the value only if it is not already set in the environment
    if not os.environ.get(var):
        os.environ[var] = getpass.getpass(f"{var}: ")

_set_env("OPENAI_API_KEY")

In [2]:

_set_env("LANGSMITH_API_KEY")
os.environ["LANGSMITH_TRACING"] = "true"
os.environ["LANGSMITH_PROJECT"] = "langchain-academy"

This follows what we did previously.

In [3]:

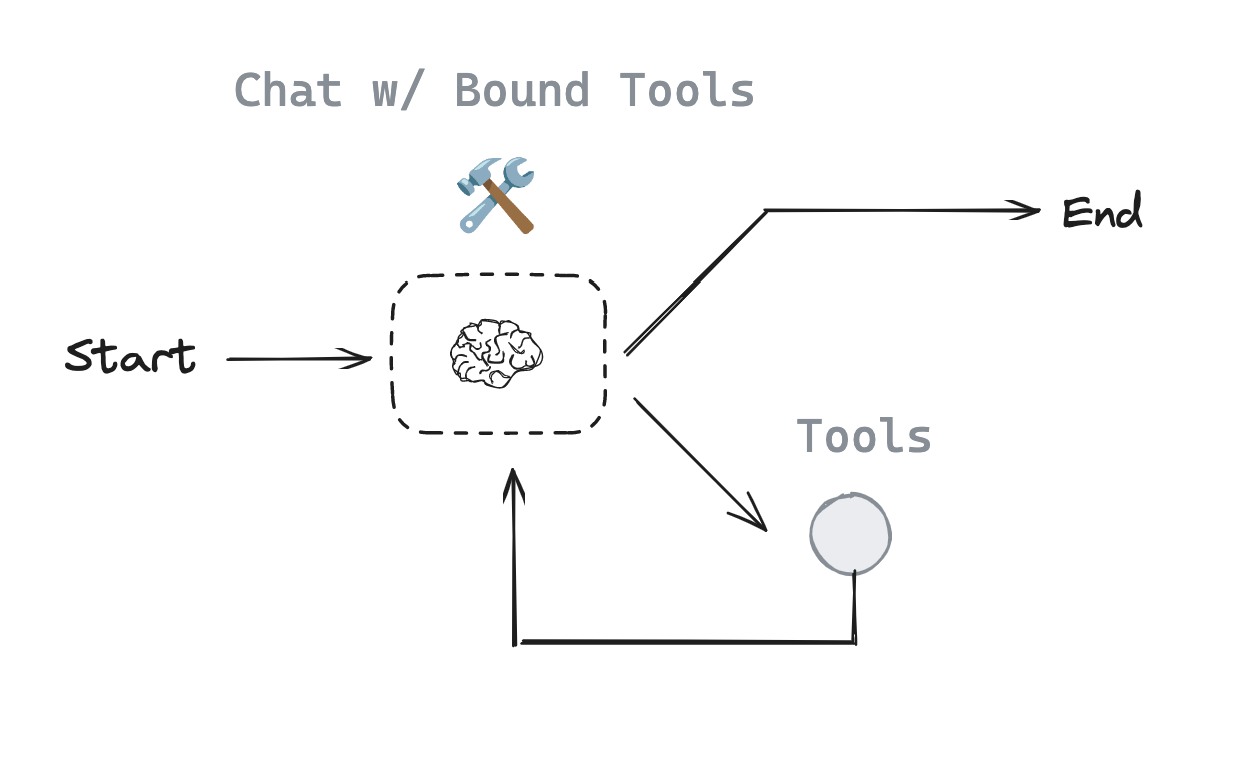

from langchain_openai import ChatOpenAI

def multiply(a: int, b: int) -> int:
    """Multiply a and b.

    Args:
        a: first int
        b: second int
    """
    return a * b

# This will be a tool
def add(a: int, b: int) -> int:
    """Add a and b.

    Args:
        a: first int
        b: second int
    """
    return a + b

def divide(a: int, b: int) -> float:
    """Divide a by b.

    Args:
        a: first int
        b: second int
    """
    return a / b

tools = [add, multiply, divide]
llm = ChatOpenAI(model="gpt-4o")
llm_with_tools = llm.bind_tools(tools)

In [4]:

from langgraph.graph import MessagesState
from langchain_core.messages import HumanMessage, SystemMessage

# System message
sys_msg = SystemMessage(content="You are a helpful assistant tasked with performing arithmetic on a set of inputs.")

# Node
def assistant(state: MessagesState):
    return {"messages": [llm_with_tools.invoke([sys_msg] + state["messages"])]}

In [5]:

from langgraph.graph import START, StateGraph
from langgraph.prebuilt import tools_condition, ToolNode
from IPython.display import Image, display

# Graph
builder = StateGraph(MessagesState)

# Define nodes: these do the work
builder.add_node("assistant", assistant)
builder.add_node("tools", ToolNode(tools))

# Define edges: these determine how the control flow moves
builder.add_edge(START, "assistant")
builder.add_conditional_edges(
    "assistant",
    # If the latest message (result) from assistant is a tool call -> tools_condition routes to tools
    # If the latest message (result) from assistant is not a tool call -> tools_condition routes to END
    tools_condition,
)
builder.add_edge("tools", "assistant")
react_graph = builder.compile()

# Show
display(Image(react_graph.get_graph(xray=True).draw_mermaid_png()))

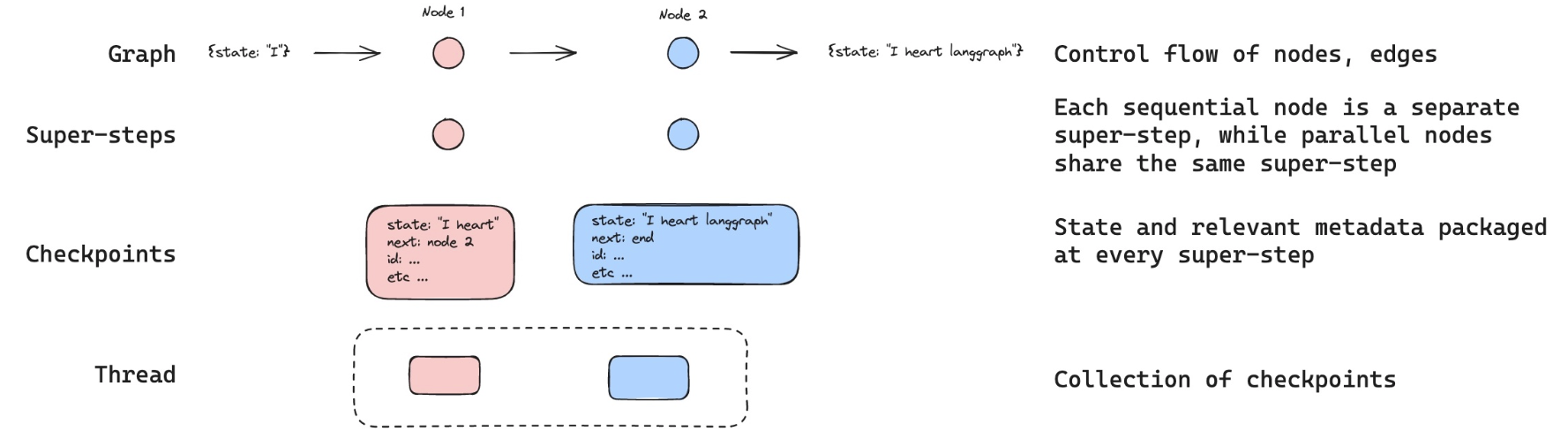
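The loop wired up above (assistant, then a conditional hop to tools, then back to assistant) can be sketched in plain Python. This is only an illustration of the control flow, not how LangGraph implements it: `scripted_assistant`, `route`, `run_tools`, and the dict-based message format are all hypothetical stand-ins, and the "model" is scripted rather than a real LLM call.

```python
from typing import Callable

def add(a: int, b: int) -> int:
    """Add a and b."""
    return a + b

def multiply(a: int, b: int) -> int:
    """Multiply a and b."""
    return a * b

# Hypothetical tool registry, keyed by tool name
TOOLS: dict[str, Callable[..., int]] = {"add": add, "multiply": multiply}

def scripted_assistant(messages: list[dict]) -> dict:
    """Stand-in for the LLM: request a tool once, then answer from the tool result."""
    last = messages[-1]
    if last["role"] == "tool":
        return {"role": "ai", "content": f"The result is {last['content']}.", "tool_calls": []}
    return {"role": "ai", "content": "", "tool_calls": [{"name": "add", "args": {"a": 3, "b": 4}}]}

def route(messages: list[dict]) -> str:
    """Plays the role of tools_condition: 'tools' if the last message has tool calls, else 'end'."""
    return "tools" if messages[-1].get("tool_calls") else "end"

def run_tools(messages: list[dict]) -> list[dict]:
    """Plays the role of the tools node: execute every requested call."""
    return [
        {"role": "tool", "name": call["name"], "content": str(TOOLS[call["name"]](**call["args"]))}
        for call in messages[-1]["tool_calls"]
    ]

def run(messages: list[dict]) -> list[dict]:
    """assistant -> (tools -> assistant)* -> end"""
    messages = list(messages)
    while True:
        messages.append(scripted_assistant(messages))
        if route(messages) == "end":
            return messages
        messages.extend(run_tools(messages))

transcript = run([{"role": "human", "content": "Add 3 and 4."}])
for m in transcript:
    print(m["role"], "|", m["content"] or m.get("tool_calls"))
```

The transcript has the same shape as the agent runs in this notebook: a human message, an AI message carrying a tool call, a tool message, and a final AI message.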

Memory

Let's run our agent, as before.

In [6]:

messages = [HumanMessage(content="Add 3 and 4.")]
messages = react_graph.invoke({"messages": messages})
for m in messages['messages']:
    m.pretty_print()

================================ Human Message =================================

Add 3 and 4.
================================== Ai Message ==================================
Tool Calls:
  add (call_zZ4JPASfUinchT8wOqg9hCZO)
 Call ID: call_zZ4JPASfUinchT8wOqg9hCZO
  Args:
    a: 3
    b: 4
================================= Tool Message =================================
Name: add

7
================================== Ai Message ==================================

The sum of 3 and 4 is 7.

Now, let's multiply by 2!

In [7]:

messages = [HumanMessage(content="Multiply that by 2.")]
messages = react_graph.invoke({"messages": messages})
for m in messages['messages']:
    m.pretty_print()

================================ Human Message =================================

Multiply that by 2.
================================== Ai Message ==================================
Tool Calls:
  multiply (call_prnkuG7OYQtbrtVQmH2d3Nl7)
 Call ID: call_prnkuG7OYQtbrtVQmH2d3Nl7
  Args:
    a: 2
    b: 2
================================= Tool Message =================================
Name: multiply

4
================================== Ai Message ==================================

The result of multiplying 2 by 2 is 4.

The model has no record of the 7 from the first exchange, so it multiplies 2 by 2. Each invoke call here starts from only the messages passed in, and state does not carry over between graph executions. To keep it, we can compile the graph with a checkpointer; MemorySaver stores the checkpoints in memory.

In [8]:

from langgraph.checkpoint.memory import MemorySaver

memory = MemorySaver()
react_graph_memory = builder.compile(checkpointer=memory)

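When we use memory, we need to specify a thread_id.

This thread_id identifies the thread that stores our collection of graph states.

Here is a schematic:

- The checkpointer writes the state at every step of the graph
- These checkpoints are saved in a thread
- We can access that thread in the future using the thread_id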
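The three points above can be mimicked with a toy store. This is a minimal sketch of the idea only, not LangGraph's checkpointer: the `ToyCheckpointer` class, its `put`, `latest`, and `history` methods, and the string-based messages are all hypothetical names made up for illustration.

```python
from collections import defaultdict
from copy import deepcopy

class ToyCheckpointer:
    """Toy in-memory store (hypothetical): thread_id -> list of state snapshots."""

    def __init__(self):
        self._threads = defaultdict(list)

    def put(self, thread_id: str, state: dict) -> None:
        # Store a copy so later mutations do not alter saved checkpoints
        self._threads[thread_id].append(deepcopy(state))

    def latest(self, thread_id: str):
        # Most recent snapshot for the thread, or None if the thread is unknown
        history = self._threads.get(thread_id)
        return deepcopy(history[-1]) if history else None

    def history(self, thread_id: str) -> list:
        return list(self._threads.get(thread_id, []))

store = ToyCheckpointer()
state = {"messages": ["human: Add 3 and 4."]}

# Simulate three graph steps; a checkpoint is written after each one
steps = ["ai: call add(a=3, b=4)", "tool: 7", "ai: The sum of 3 and 4 is 7."]
for message in steps:
    state = {"messages": state["messages"] + [message]}
    store.put("1", state)

print(len(store.history("1")))            # one snapshot per step
print(store.latest("1")["messages"][-1])  # last message in the newest snapshot
print(store.latest("2"))                  # a thread that was never written to
```

Keying snapshots by thread_id is what keeps separate conversations apart: thread "2" has no history even though thread "1" does.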

In [9]:

# Specify a thread
config = {"configurable": {"thread_id": "1"}}

# Specify an input
messages = [HumanMessage(content="Add 3 and 4.")]

# Run
messages = react_graph_memory.invoke({"messages": messages}, config)
for m in messages['messages']:
    m.pretty_print()

================================ Human Message =================================

Add 3 and 4.
================================== Ai Message ==================================
Tool Calls:
  add (call_MSupVAgej4PShIZs7NXOE6En)
 Call ID: call_MSupVAgej4PShIZs7NXOE6En
  Args:
    a: 3
    b: 4
================================= Tool Message =================================
Name: add

7
================================== Ai Message ==================================

The sum of 3 and 4 is 7.

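If we pass the same thread_id, we can continue from the previously recorded state checkpoint!

In this case, the conversation above is captured in the thread.

The HumanMessage we pass ("Multiply that by 2.") is appended to that conversation.

So the model now knows that "that" refers to "The sum of 3 and 4 is 7."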

In [10]:

messages = [HumanMessage(content="Multiply that by 2.")]
messages = react_graph_memory.invoke({"messages": messages}, config)
for m in messages['messages']:
    m.pretty_print()

================================ Human Message =================================

Add 3 and 4.
================================== Ai Message ==================================
Tool Calls:
  add (call_MSupVAgej4PShIZs7NXOE6En)
 Call ID: call_MSupVAgej4PShIZs7NXOE6En
  Args:
    a: 3
    b: 4
================================= Tool Message =================================
Name: add

7
================================== Ai Message ==================================

The sum of 3 and 4 is 7.
================================ Human Message =================================

Multiply that by 2.
================================== Ai Message ==================================
Tool Calls:
  multiply (call_fWN7lnSZZm82tAg7RGeuWusO)
 Call ID: call_fWN7lnSZZm82tAg7RGeuWusO
  Args:
    a: 7
    b: 2
================================= Tool Message =================================
Name: multiply

14
================================== Ai Message ==================================

The result of multiplying 7 by 2 is 14.
