%%capture --no-stderr

%pip install --quiet -U langchain_openai langchain_core langgraph

Messages

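Chat models can use messages to capture the different roles within a conversation.

LangChain supports several message types, including HumanMessage (user messages), AIMessage (AI messages), SystemMessage (system messages), and ToolMessage (tool-call messages).

These represent, respectively, a message from the user, a response from the chat model, an instruction that guides the chat model's behavior, and a message from a tool call.

Let's create a list of messages.

Each message can be supplied with the following:

- content: the content of the message
- name: optional, the name of the message author
- response_metadata: optional, a dict of metadata (for example, often populated by the model provider for AIMessages)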
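To make the relationship between message types and conversation roles concrete, here is a minimal sketch that models messages with a plain dataclass instead of LangChain's own classes. The names SimpleMessage, ROLE_BY_TYPE, and to_role_dict are hypothetical, and the role strings (user, assistant, system, tool) follow a common chat-API convention that is assumed here rather than defined by this notebook.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed mapping from message type to a chat-API style role string.
ROLE_BY_TYPE = {
    "human": "user",
    "ai": "assistant",
    "system": "system",
    "tool": "tool",
}


@dataclass
class SimpleMessage:
    """Hypothetical stand-in for a chat message (not a LangChain class)."""
    type: str
    content: str
    name: Optional[str] = None


def to_role_dict(message: SimpleMessage) -> dict:
    """Convert a message into a role/content dict, keeping the optional name."""
    payload = {"role": ROLE_BY_TYPE[message.type], "content": message.content}
    if message.name is not None:
        payload["name"] = message.name
    return payload


conversation = [
    SimpleMessage("ai", "So you said you were researching ocean mammals?", name="Model"),
    SimpleMessage("human", "Yes, that's right.", name="Lance"),
]
role_dicts = [to_role_dict(m) for m in conversation]
print(role_dicts)
```

The only point of the sketch is that each message carries a type, its content, and an optional author name.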

from pprint import pprint

from langchain_core.messages import AIMessage, HumanMessage

messages = [AIMessage(content="So you said you were researching ocean mammals?", name="Model")]
messages.append(HumanMessage(content="Yes, that's right.", name="Lance"))
messages.append(AIMessage(content="Great, what would you like to learn about.", name="Model"))
messages.append(HumanMessage(content="I want to learn about the best place to see Orcas in the US.", name="Lance"))

# Print each message with its role banner
for m in messages:
    m.pretty_print()

================================== Ai Message ==================================
Name: Model

So you said you were researching ocean mammals?
================================ Human Message =================================
Name: Lance

Yes, that's right.
================================== Ai Message ==================================
Name: Model

Great, what would you like to learn about.
================================ Human Message =================================
Name: Lance

I want to learn about the best place to see Orcas in the US.

import os, getpass

def _set_env(var: str):
    # Prompt for the variable only if it is not already set
    if not os.environ.get(var):
        os.environ[var] = getpass.getpass(f"{var}: ")

_set_env("OPENAI_API_KEY")

We can load a chat model and invoke it with our list of messages.

We can see that the result is an AIMessage with specific response_metadata.

from langchain_openai import ChatOpenAI

llm = ChatOpenAI(model="gpt-4o")

result = llm.invoke(messages)

type(result)

langchain_core.messages.ai.AIMessage

result

AIMessage(content='One of the best places to see orcas in the United States is the Pacific Northwest, particularly around the San Juan Islands in Washington State. Here are some details:\n\n1. **San Juan Islands, Washington**: These islands are a renowned spot for whale watching, with orcas frequently spotted between late spring and early fall. The waters around the San Juan Islands are home to both resident and transient orca pods, making it an excellent location for sightings.\n\n2. **Puget Sound, Washington**: This area, including places like Seattle and the surrounding waters, offers additional opportunities to see orcas, particularly the Southern Resident killer whale population.\n\n3. **Olympic National Park, Washington**: The coastal areas of the park provide a stunning backdrop for spotting orcas, especially during their migration periods.\n\nWhen planning a trip for whale watching, consider peak seasons for orca activity and book tours with reputable operators who adhere to responsible wildlife viewing practices. Additionally, land-based spots like Lime Kiln Point State Park, also known as “Whale Watch Park,” on San Juan Island, offer great opportunities for orca watching from shore.', additional_kwargs={'refusal': None}, response_metadata={'token_usage': {'completion_tokens': 228, 'prompt_tokens': 67, 'total_tokens': 295, 'completion_tokens_details': {'accepted_prediction_tokens': 0, 'audio_tokens': 0, 'reasoning_tokens': 0, 'rejected_prediction_tokens': 0}, 'prompt_tokens_details': {'audio_tokens': 0, 'cached_tokens': 0}}, 'model_name': 'gpt-4o-2024-08-06', 'system_fingerprint': 'fp_50cad350e4', 'finish_reason': 'stop', 'logprobs': None}, id='run-57ed2891-c426-4452-b44b-15d0a5c3f225-0', usage_metadata={'input_tokens': 67, 'output_tokens': 228, 'total_tokens': 295, 'input_token_details': {'audio': 0, 'cache_read': 0}, 'output_token_details': {'audio': 0, 'reasoning': 0}})

result.response_metadata

{'token_usage': {'completion_tokens': 228,
  'prompt_tokens': 67,
  'total_tokens': 295,
  'completion_tokens_details': {'accepted_prediction_tokens': 0,
   'audio_tokens': 0,
   'reasoning_tokens': 0,
   'rejected_prediction_tokens': 0},
  'prompt_tokens_details': {'audio_tokens': 0, 'cached_tokens': 0}},
 'model_name': 'gpt-4o-2024-08-06',
 'system_fingerprint': 'fp_50cad350e4',
 'finish_reason': 'stop',
 'logprobs': None}
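As a small illustration of reading this metadata, the sketch below works on a plain dict copied (and abridged) from the output above, so it runs without calling a model. The helper summarize_usage is a hypothetical name, and the key layout is what this particular response shows; other providers may populate response_metadata differently.

```python
# Abridged copy of the response_metadata printed above.
response_metadata = {
    "token_usage": {
        "completion_tokens": 228,
        "prompt_tokens": 67,
        "total_tokens": 295,
    },
    "model_name": "gpt-4o-2024-08-06",
    "finish_reason": "stop",
}


def summarize_usage(metadata: dict) -> str:
    """Hypothetical helper: format token usage, tolerating missing keys."""
    usage = metadata.get("token_usage", {})
    prompt = usage.get("prompt_tokens", 0)
    completion = usage.get("completion_tokens", 0)
    total = usage.get("total_tokens", prompt + completion)
    model = metadata.get("model_name", "unknown")
    return f"{model}: {prompt} prompt + {completion} completion = {total} tokens"


summary = summarize_usage(response_metadata)
print(summary)
```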

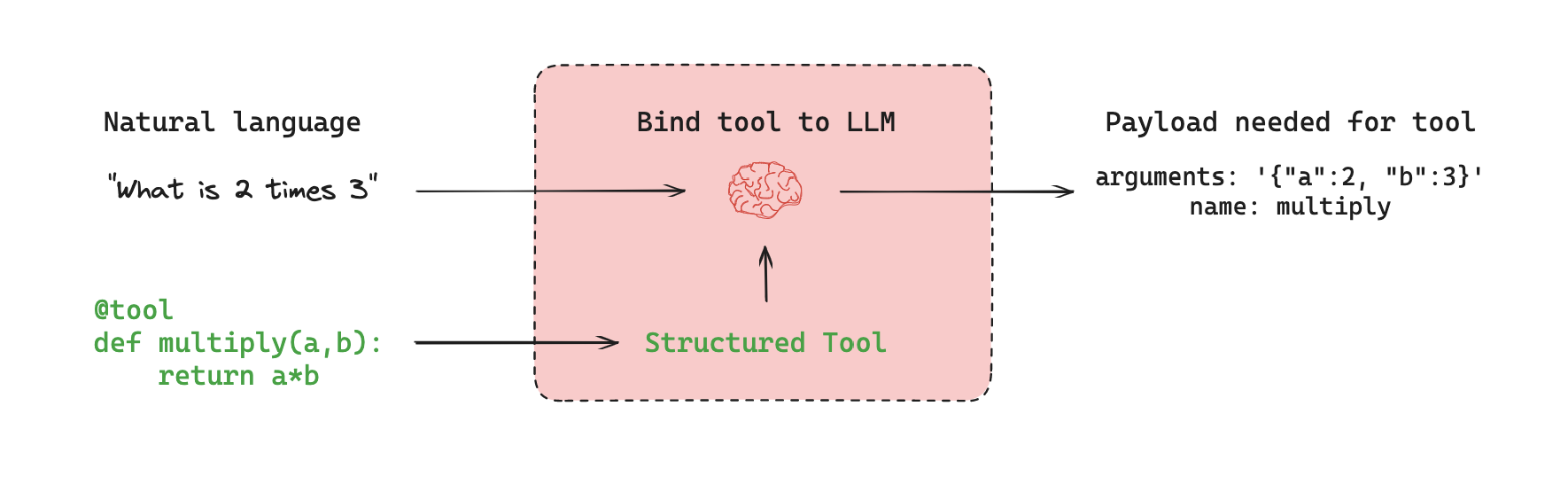

Tools

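Tools are useful whenever you want a model to interact with external systems.

External systems (e.g., APIs) often require a particular input schema or payload rather than natural language. When we bind an API as a tool, for example, we give the model awareness of the required input schema.

The model chooses whether to call a tool based on the user's natural-language input. When it does call one, it returns structured output that adheres to the tool's schema.

Many LLM providers support tool calling, and the tool-calling interface in LangChain is simple. You pass a list of tools, each of which can be any Python function, to ChatModel.bind_tools([function]).

Let's showcase a simple example of tool calling!

The multiply function is our tool.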

def multiply(a: int, b: int) -> int:
    """Multiply a and b.

    Args:
        a: first int
        b: second int
    """
    return a * b

llm_with_tools = llm.bind_tools([multiply])
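To give a feel for the kind of information a model needs about a tool, the following sketch derives a simplified description from a function's signature, type hints, and docstring using only the standard library. The helper describe_tool and the shape of the dict it returns are hypothetical; this is not how bind_tools is implemented, only an illustration of why typed arguments and a docstring are useful on a tool function.

```python
import inspect
from typing import get_type_hints


def multiply(a: int, b: int) -> int:
    """Multiply a and b."""
    return a * b


def describe_tool(func) -> dict:
    """Hypothetical helper: build a simplified tool description from a function."""
    hints = get_type_hints(func)
    # Map each parameter name to the name of its annotated type.
    parameters = {
        name: hints.get(name, object).__name__
        for name in inspect.signature(func).parameters
    }
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": parameters,
    }


tool_description = describe_tool(multiply)
print(tool_description)
```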

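If we pass an input, e.g. "What is 2 multiplied by 3", we see a tool call returned.

The tool call has specific arguments that match the input schema of our function, along with the name of the function to call.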

{'arguments': '{"a":2,"b":3}', 'name': 'multiply'}

tool_call = llm_with_tools.invoke([HumanMessage(content=f"What is 2 multiplied by 3", name="Lance")])

tool_call.tool_calls

[{'name': 'multiply',
  'args': {'a': 2, 'b': 3},
  'id': 'call_lBBBNo5oYpHGRqwxNaNRbsiT',
  'type': 'tool_call'}]
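Note that the model only returns a request to call the tool; it does not run the function. One way the returned dict could be turned into an actual call is sketched below in plain Python. The registry TOOLS and the helper run_tool_call are hypothetical names introduced for illustration, and example_call simply copies the shape of the entry shown above.

```python
def multiply(a: int, b: int) -> int:
    """Multiply a and b."""
    return a * b


# Hypothetical registry: tool name (as the model refers to it) -> Python callable.
TOOLS = {"multiply": multiply}

# Same shape as the entry in tool_call.tool_calls shown above.
example_call = {
    "name": "multiply",
    "args": {"a": 2, "b": 3},
    "id": "call_lBBBNo5oYpHGRqwxNaNRbsiT",
    "type": "tool_call",
}


def run_tool_call(call: dict):
    """Look up the requested tool by name and call it with the supplied arguments."""
    tool = TOOLS[call["name"]]
    return tool(**call["args"])


product = run_tool_call(example_call)
print(product)  # 6
```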

from typing_extensions import TypedDict
from langchain_core.messages import AnyMessage

class MessagesState(TypedDict):
    messages: list[AnyMessage]

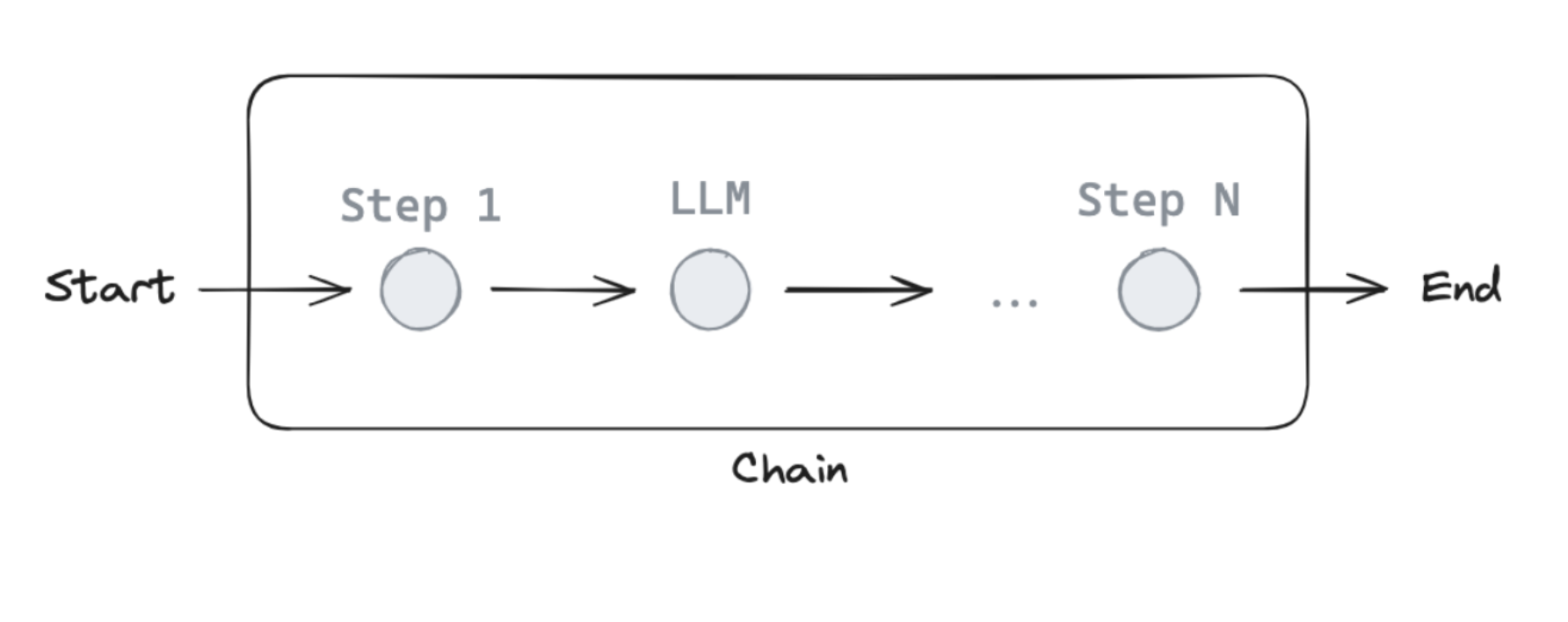

Reducers

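Now, we have a minor problem!

As discussed, each node returns a new value for our state key messages.

But this new value overrides the prior messages value.

As our graph runs, we want to append messages to the messages state key.

We can use reducer functions to address this.

Reducers let us specify how state updates are performed.

If no reducer function is specified, updates to the key override it by default (as we saw before).

But to append messages, we can use the pre-built add_messages reducer.

This ensures that any new messages are appended to the existing list of messages.

We simply annotate our messages key with the add_messages reducer function as metadata.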
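The difference between the default overwrite and a reducer-based update can be sketched in plain Python. This is a conceptual stand-in, not LangGraph's implementation: apply_update is a hypothetical helper, messages are plain strings, and list concatenation plays the role of an append-style reducer.

```python
from typing import Optional


def apply_update(state: dict, update: dict, reducers: Optional[dict] = None) -> dict:
    """Hypothetical helper: merge a node's update into the state, key by key."""
    reducers = reducers or {}
    new_state = dict(state)
    for key, value in update.items():
        reducer = reducers.get(key)
        if reducer is None or key not in state:
            # No reducer for this key: the new value replaces the old one.
            new_state[key] = value
        else:
            # A reducer decides how the old and new values are combined.
            new_state[key] = reducer(state[key], value)
    return new_state


state = {"messages": ["Hello!"]}
update = {"messages": ["Hi there! How can I assist you today?"]}

overwritten = apply_update(state, update)
appended = apply_update(state, update, reducers={"messages": lambda old, new: old + new})

print(overwritten)
print(appended)
```

Without a reducer the earlier message is lost; with one, the history is preserved and extended.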

from typing import Annotated
from langgraph.graph.message import add_messages

class MessagesState(TypedDict):
    messages: Annotated[list[AnyMessage], add_messages]
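Annotated attaches extra metadata to a type hint without changing the type itself, which is what makes it a convenient place to put a reducer. The sketch below shows, with the standard library only, how such metadata can be read back from a TypedDict. ExampleState and find_reducers are hypothetical names, operator.add stands in for add_messages, and nothing here is meant to describe LangGraph's internals.

```python
import operator
from typing import Annotated, TypedDict, get_type_hints


class ExampleState(TypedDict):
    messages: Annotated[list, operator.add]  # reducer attached as metadata
    topic: str                               # no reducer attached


def find_reducers(schema) -> dict:
    """Hypothetical helper: collect callables attached to keys via Annotated."""
    reducers = {}
    # include_extras=True keeps the Annotated wrapper so metadata stays visible.
    for key, hint in get_type_hints(schema, include_extras=True).items():
        metadata = getattr(hint, "__metadata__", ())
        if metadata and callable(metadata[-1]):
            reducers[key] = metadata[-1]
    return reducers


reducers = find_reducers(ExampleState)
print(reducers)
print(reducers["messages"](["Hello!"], ["Hi there!"]))
```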

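Since having a list of messages in graph state is so common, LangGraph provides a pre-built MessagesState!

MessagesState is defined:

- With a single pre-built messages key
- Whose value is a list of AnyMessage objects
- Using the add_messages reducer

We'll usually use MessagesState because it is less verbose than defining a custom TypedDict, as shown above.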

from langgraph.graph import MessagesState

class MessagesState(MessagesState):
    # Add any keys needed beyond messages, which is pre-built
    pass

To go a bit deeper, we can look at how the add_messages reducer works in isolation.

# Initial state
initial_messages = [
    AIMessage(content="Hello! How can I assist you?", name="Model"),
    HumanMessage(content="I'm looking for information on marine biology.", name="Lance"),
]

# New message to add
new_message = AIMessage(content="Sure, I can help with that. What specifically are you interested in?", name="Model")

# Test
add_messages(initial_messages, new_message)

[AIMessage(content='Hello! How can I assist you?', name='Model', id='cd566566-0f42-46a4-b374-fe4d4770ffa7'), HumanMessage(content="I'm looking for information on marine biology.", name='Lance', id='9b6c4ddb-9de3-4089-8d22-077f53e7e915'), AIMessage(content='Sure, I can help with that. What specifically are you interested in?', name='Model', id='74a549aa-8b8b-48d4-bdf1-12e98404e44e')]
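Two things are visible in this output: the single new message was accepted alongside a list, and every message now has an id. A simplified stand-in that mirrors just those two behaviors is sketched below, using plain dicts as messages. The function append_messages is a hypothetical name, and the real add_messages reducer does more than this sketch covers.

```python
import uuid


def append_messages(existing: list, new) -> list:
    """Simplified, hypothetical stand-in for an append-style message reducer."""
    # Accept either a single message or a list of messages.
    incoming = new if isinstance(new, list) else [new]
    # Copy every message so the inputs are left unmodified.
    merged = [dict(message) for message in existing + incoming]
    # Assign an id to any message that does not have one yet.
    for message in merged:
        if "id" not in message:
            message["id"] = str(uuid.uuid4())
    return merged


initial = [
    {"type": "ai", "content": "Hello! How can I assist you?"},
    {"type": "human", "content": "I'm looking for information on marine biology."},
]
reply = {"type": "ai", "content": "Sure, I can help with that. What specifically are you interested in?"}

merged = append_messages(initial, reply)
for message in merged:
    print(message["type"], message["id"])
```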

Our graph

Now, let's use MessagesState with a graph.

from IPython.display import Image, display
from langgraph.graph import StateGraph, START, END

# Node
def tool_calling_llm(state: MessagesState):
    # Return only the new message; the add_messages reducer appends it to the state
    return {"messages": [llm_with_tools.invoke(state["messages"])]}

# Build graph
builder = StateGraph(MessagesState)
builder.add_node("tool_calling_llm", tool_calling_llm)
builder.add_edge(START, "tool_calling_llm")
builder.add_edge("tool_calling_llm", END)
graph = builder.compile()

# View
display(Image(graph.get_graph().draw_mermaid_png()))

If we pass in Hello!, the LLM responds directly without calling any tools.

messages = graph.invoke({"messages": HumanMessage(content="Hello!")})

for m in messages['messages']:
    m.pretty_print()

================================ Human Message =================================

Hello!
================================== Ai Message ==================================

Hi there! How can I assist you today?

The LLM chooses to use a tool when it determines that the input or task requires the functionality that tool provides.

messages = graph.invoke({"messages": HumanMessage(content="Multiply 2 and 3")})

for m in messages['messages']:
    m.pretty_print()

================================ Human Message =================================

Multiply 2 and 3
================================== Ai Message ==================================
Tool Calls:
  multiply (call_Er4gChFoSGzU7lsuaGzfSGTQ)
 Call ID: call_Er4gChFoSGzU7lsuaGzfSGTQ
  Args:
    a: 2
    b: 3
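The two runs above can be mimicked offline with a toy stand-in, which may help when reasoning about the flow from START through the node to END. Everything in this sketch is hypothetical: fake_tool_calling_llm is a keyword rule rather than a model, messages are plain dicts, and run_single_node_graph only imitates a single-node graph with an append-style reducer.

```python
def fake_tool_calling_llm(messages: list) -> dict:
    """Hypothetical stand-in for a tool-calling model; no API is called."""
    text = messages[-1]["content"]
    numbers = [int(token) for token in text.split() if token.isdigit()]
    if "multiply" in text.lower() and len(numbers) == 2:
        # Request a tool call instead of answering in natural language.
        call = {"name": "multiply", "args": {"a": numbers[0], "b": numbers[1]}}
        return {"type": "ai", "content": "", "tool_calls": [call]}
    return {"type": "ai", "content": "Hi there! How can I assist you today?", "tool_calls": []}


def tool_calling_node(state: dict) -> dict:
    # Return only the new message as a state update.
    return {"messages": [fake_tool_calling_llm(state["messages"])]}


def run_single_node_graph(state: dict) -> dict:
    # START -> node -> END, appending the node's update to the existing messages.
    update = tool_calling_node(state)
    return {"messages": state["messages"] + update["messages"]}


greeting = run_single_node_graph({"messages": [{"type": "human", "content": "Hello!"}]})
multiplication = run_single_node_graph({"messages": [{"type": "human", "content": "Multiply 2 and 3"}]})

print(greeting["messages"][-1]["content"])
print(multiplication["messages"][-1]["tool_calls"])
```

In both cases the final state holds the human message followed by one AI message; only the second AI message carries a tool call, and the tool itself is never executed.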