%%capture --no-stderr
%pip install --quiet -U langchain_openai langchain_core langgraph langgraph-prebuilt

import os, getpass

def _set_env(var: str):
    if not os.environ.get(var):
        os.environ[var] = getpass.getpass(f"{var}: ")

_set_env("OPENAI_API_KEY")

from langchain_openai import ChatOpenAI

def multiply(a: int, b: int) -> int:
    """Multiply a and b.

    Args:
        a: first int
        b: second int
    """
    return a * b

llm = ChatOpenAI(model="gpt-4o")
llm_with_tools = llm.bind_tools([multiply])
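
To make the binding step more concrete, the sketch below shows roughly what kind of information can be read off a plain Python function such as `multiply`: its name, the first line of its docstring, and its typed parameters. The helper `sketch_tool_schema` is hypothetical and written only for illustration. It is not the code that `bind_tools` runs, and the real conversion covers many more cases.

```python
import inspect

# Hypothetical mapping and helper for illustration only; NOT LangChain's implementation.
_JSON_TYPES = {int: "integer", float: "number", str: "string", bool: "boolean"}

def sketch_tool_schema(fn):
    """Build an OpenAI-style function schema from a function's signature."""
    sig = inspect.signature(fn)
    properties = {}
    required = []
    for name, param in sig.parameters.items():
        # Unknown annotations fall back to "string" in this simplified sketch.
        properties[name] = {"type": _JSON_TYPES.get(param.annotation, "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)
    doc = inspect.getdoc(fn) or ""
    return {
        "type": "function",
        "function": {
            "name": fn.__name__,
            "description": doc.splitlines()[0] if doc else "",
            "parameters": {
                "type": "object",
                "properties": properties,
                "required": required,
            },
        },
    }

def multiply(a: int, b: int) -> int:
    """Multiply a and b.

    Args:
        a: first int
        b: second int
    """
    return a * b

schema = sketch_tool_schema(multiply)
print(schema["function"]["name"])
print(schema["function"]["parameters"]["required"])
```

The point of the sketch is that type hints and a docstring are what give the model enough information to decide when to call the tool and with which arguments.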

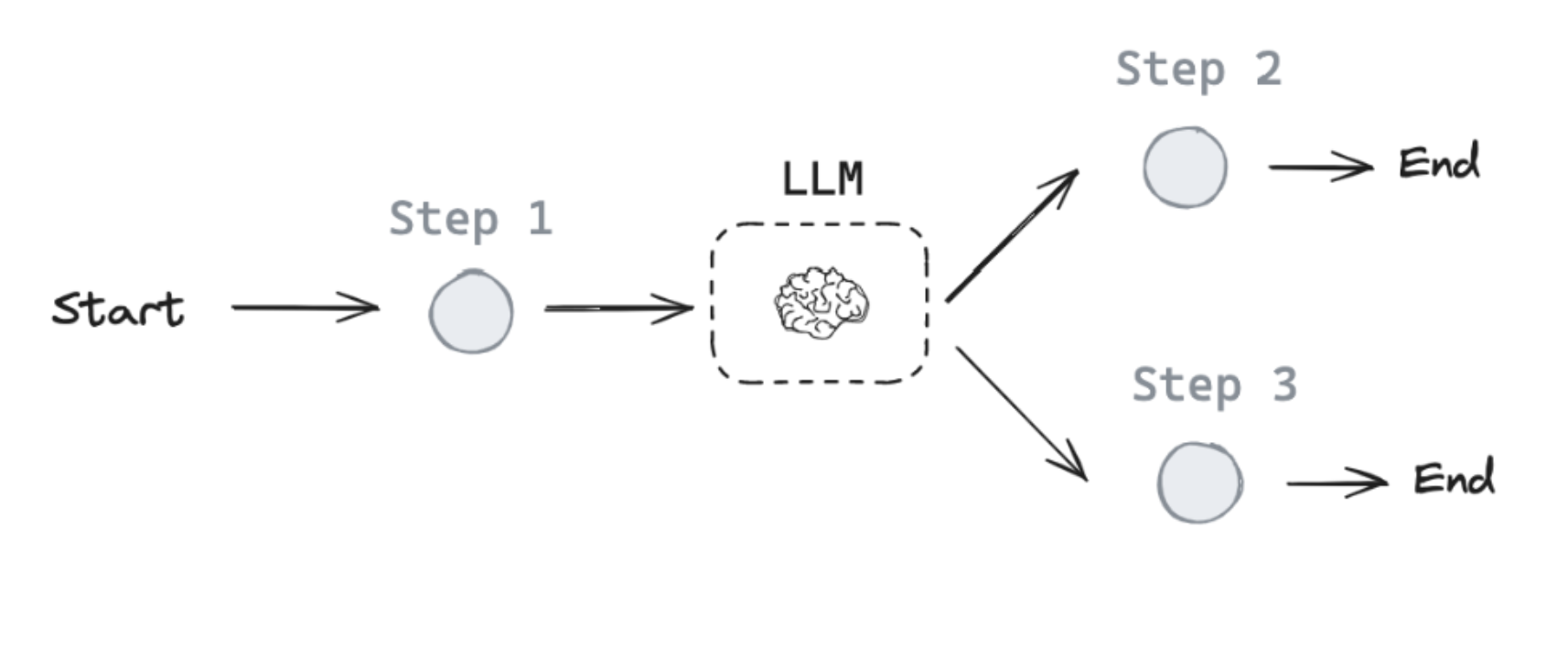

We use the built-in ToolNode and initialize it simply by passing in a list of our tools.

We use the built-in tools_condition as our conditional edge.
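
As a rough mental model of what such a conditional edge decides, here is a minimal sketch in plain Python. `FakeAIMessage` and `sketch_tools_condition` are hypothetical stand-ins written for this example, not LangGraph or LangChain classes, and the string `"__end__"` is assumed to match the value of LangGraph's END constant.

```python
from dataclasses import dataclass, field

END = "__end__"  # assumption: mirrors the value of langgraph's END constant

@dataclass
class FakeAIMessage:
    """Hypothetical stand-in for an AI message; not a LangChain class."""
    content: str = ""
    tool_calls: list = field(default_factory=list)

def sketch_tools_condition(state: dict) -> str:
    """Return "tools" if the last message requests a tool call, otherwise END."""
    last = state["messages"][-1]
    if getattr(last, "tool_calls", None):
        return "tools"
    return END

# A plain chat reply carries no tool calls, so the graph would finish.
plain = {"messages": [FakeAIMessage(content="Hello!")]}

# A reply that requests the multiply tool would be routed to the "tools" node.
call = {
    "messages": [
        FakeAIMessage(tool_calls=[{"name": "multiply", "args": {"a": 2, "b": 2}, "id": "call_1"}])
    ]
}

route_plain = sketch_tools_condition(plain)
route_call = sketch_tools_condition(call)
print(route_plain, route_call)
```

The returned string is the name of the next node, which is why the tool node in the graph is registered under the name "tools".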

from IPython.display import Image, display

from langgraph.graph import StateGraph, START, END
from langgraph.graph import MessagesState
from langgraph.prebuilt import ToolNode
from langgraph.prebuilt import tools_condition

# Node
def tool_calling_llm(state: MessagesState):
    return {"messages": [llm_with_tools.invoke(state["messages"])]}

# Build graph
builder = StateGraph(MessagesState)
builder.add_node("tool_calling_llm", tool_calling_llm)
builder.add_node("tools", ToolNode([multiply]))
builder.add_edge(START, "tool_calling_llm")
builder.add_conditional_edges(
    "tool_calling_llm",
    # If the latest message (result) from the assistant is a tool call -> tools_condition routes to tools
    # If the latest message (result) from the assistant is not a tool call -> tools_condition routes to END
    tools_condition,
)
builder.add_edge("tools", END)
graph = builder.compile()

# View
display(Image(graph.get_graph().draw_mermaid_png()))

from langchain_core.messages import HumanMessage

messages = [HumanMessage(content="Hello, what is 2 multiplied by 2?")]
messages = graph.invoke({"messages": messages})
for m in messages['messages']:
    m.pretty_print()

================================ Human Message =================================

Hello world.

================================== Ai Message ==================================

Hello! How can I assist you today?

Now we can see that the graph runs the tool!

It responds with a ToolMessage.
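
To illustrate where that tool response comes from, the sketch below mimics the idea of a tool-executing node in plain Python: it looks up each requested tool by name, calls it with the provided arguments, and wraps the result in a message-like dict. `sketch_tool_node` and the dict layout are hypothetical simplifications for this example, not the behavior or data types of the real ToolNode.

```python
def multiply(a: int, b: int) -> int:
    """Multiply a and b."""
    return a * b

def sketch_tool_node(tools, tool_calls):
    """Hypothetical simplification: run each requested tool and collect results."""
    by_name = {fn.__name__: fn for fn in tools}
    results = []
    for call in tool_calls:
        output = by_name[call["name"]](**call["args"])
        results.append(
            {
                "type": "tool",
                "name": call["name"],
                # The id ties the result back to the request that produced it.
                "tool_call_id": call["id"],
                "content": str(output),
            }
        )
    return results

# A tool call shaped like the one the model would emit for "2 multiplied by 2".
calls = [{"name": "multiply", "args": {"a": 2, "b": 2}, "id": "call_1"}]
tool_messages = sketch_tool_node([multiply], calls)
print(tool_messages[0]["content"])
```

Because the graph above connects the tools node directly to END, a run that takes this route finishes with the tool result as its last message rather than a natural-language answer.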

LangGraph Studio

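⚠️ Disclaimer

Since these videos were recorded, we have updated Studio so that it can run locally and open in your browser. This is now the preferred way to run Studio (rather than the Desktop App shown in the video). See the documentation on the local development server and the instructions for running it. To start the local development server, run the following command in your terminal from the /studio directory in this module: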

langgraph dev

You should see the following output:

- 🚀 API: http://127.0.0.1:2024

- 🎨 Studio UI: https://smith.langchain.com/studio/?baseUrl=http://127.0.0.1:2024

- 📚 API Docs: http://127.0.0.1:2024/docs

Open your browser and navigate to the Studio UI: https://smith.langchain.com/studio/?baseUrl=http://127.0.0.1:2024

Load the router in Studio. It uses module-1/studio/router.py, as configured in module-1/studio/langgraph.json.
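
For orientation, a langgraph.json file maps graph names to a `path:variable` entry under a "graphs" key. The snippet below is a sketch under assumptions: the config text is an assumed example rather than the actual contents of module-1/studio/langgraph.json, the variable name `graph` is a guess, and `resolve_graph` is a hypothetical helper, not part of the LangGraph CLI.

```python
import json

# Assumed example config; the real module-1/studio/langgraph.json may differ.
EXAMPLE_CONFIG = """
{
  "dependencies": ["."],
  "graphs": {
    "router": "./router.py:graph"
  }
}
"""

def resolve_graph(config_text: str, name: str):
    """Split a "path:variable" graph entry into (file path, variable name)."""
    config = json.loads(config_text)
    path, _, variable = config["graphs"][name].rpartition(":")
    return path, variable

router_path, router_variable = resolve_graph(EXAMPLE_CONFIG, "router")
print(router_path, router_variable)
```

Under these assumptions, the name shown in Studio ("router") is the key in the "graphs" mapping, and the value tells the server which file and which compiled graph object to load.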

if 'google.colab' in str(get_ipython()):
    raise Exception("Unfortunately LangGraph Studio is currently not supported on Google Colab")
